1 AlphaTree

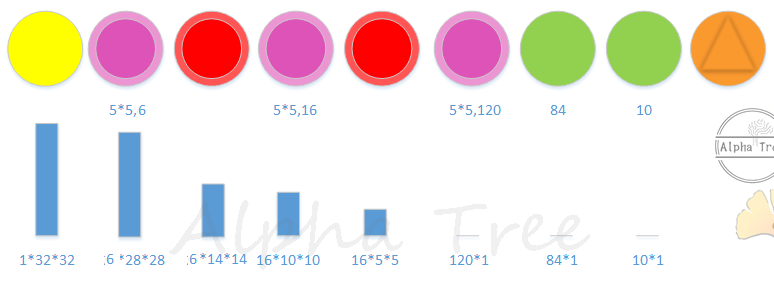

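Many papers describe their models in their own style, which is a real challenge for those of us who are just getting started. We have long hoped that some expert would describe these models with a single, unified way of drawing them, and the overview diagrams of deep neural network (DNN) and generative adversarial network (GAN) models fulfil that hope. What makes AlphaTree valuable is that it defines a set of icons, as follows: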

[Icon legend: Conv + Max, i.e. a convolution followed by max pooling; icon images omitted]

There is no point in reinventing the wheel; the detailed model descriptions are available here.

The diagrams would be even better if they also labelled the activation function used in each model.

1.1 Object Classification

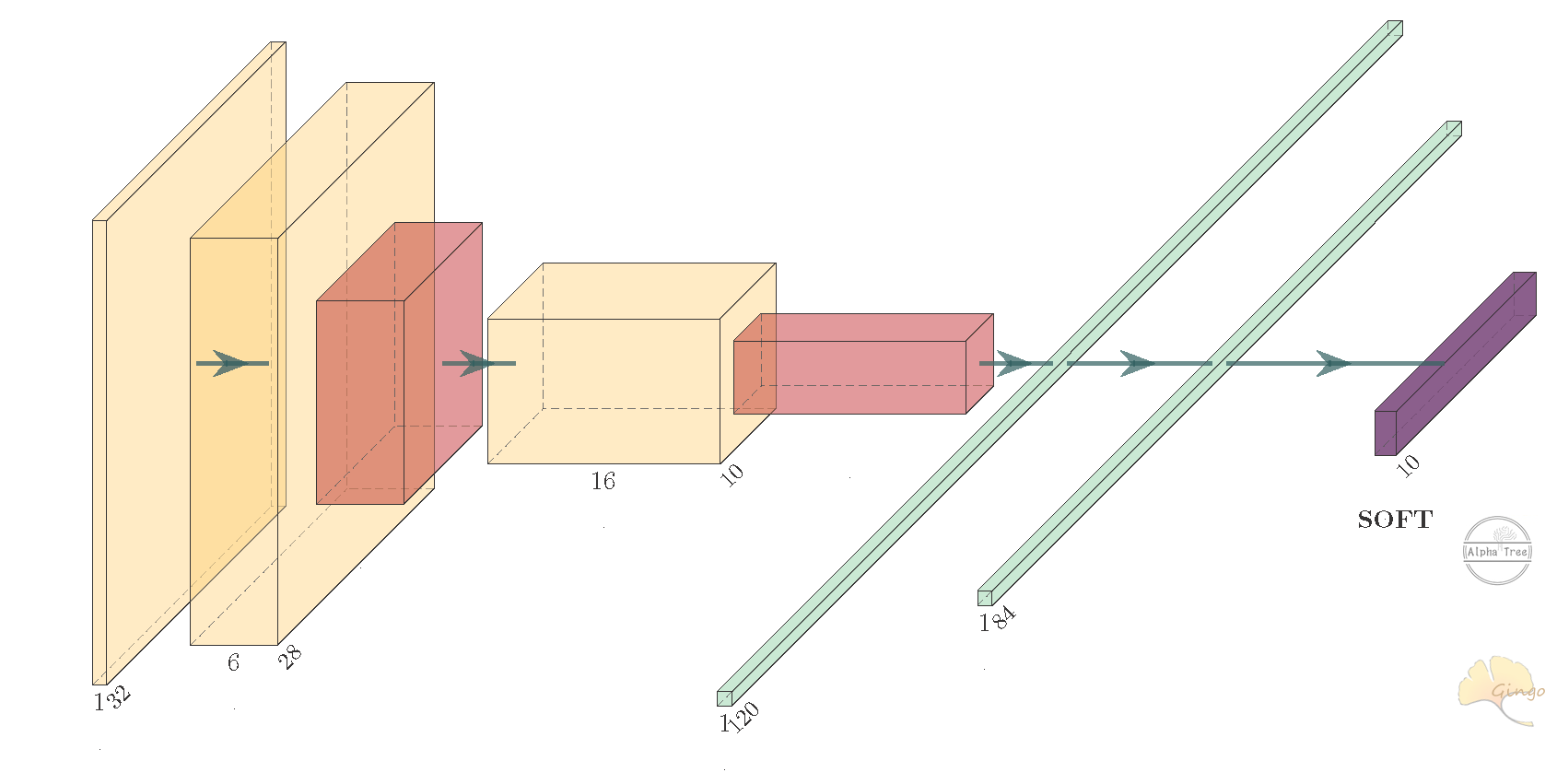

1.1.1 LeNet

- Model structure (activation function changed from Sigmoid to ReLU)

- Data shape changes

see more for details.
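The move from Sigmoid to ReLU is usually motivated by gradient saturation. The source does not spell this out, so the following is only a minimal sketch of that commonly cited reason; the helper names (`sigmoid_grad`, `relu_grad`) are made up for illustration.

```python
import math


def sigmoid(x: float) -> float:
    """Logistic sigmoid."""
    return 1.0 / (1.0 + math.exp(-x))


def sigmoid_grad(x: float) -> float:
    """Derivative of the sigmoid: s(x) * (1 - s(x))."""
    s = sigmoid(x)
    return s * (1.0 - s)


def relu(x: float) -> float:
    """Rectified linear unit."""
    return max(0.0, x)


def relu_grad(x: float) -> float:
    """Derivative of ReLU; the value at exactly 0 is a convention (0 here)."""
    return 1.0 if x > 0 else 0.0


if __name__ == "__main__":
    # Compare the two derivatives at a few sample inputs.
    for x in (-4.0, 0.0, 4.0):
        print(f"x={x:+.1f}  sigmoid'={sigmoid_grad(x):.4f}  relu'={relu_grad(x):.1f}")
```

The Sigmoid derivative peaks at 0.25 and shrinks quickly away from zero, whereas the ReLU derivative stays at 1 for any positive input.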

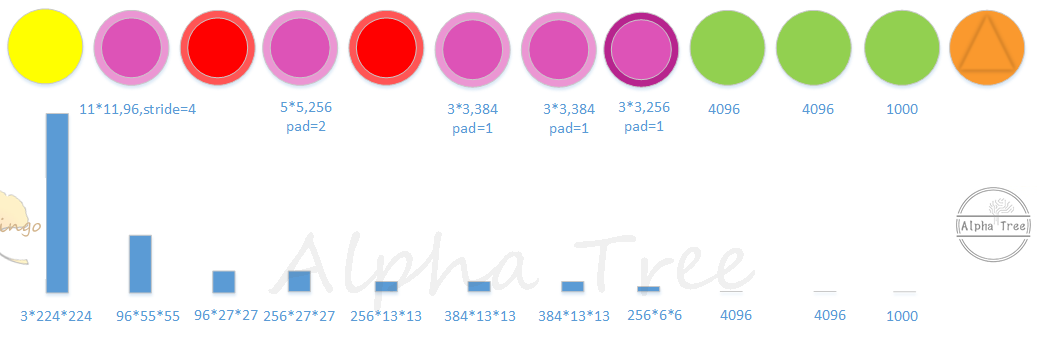

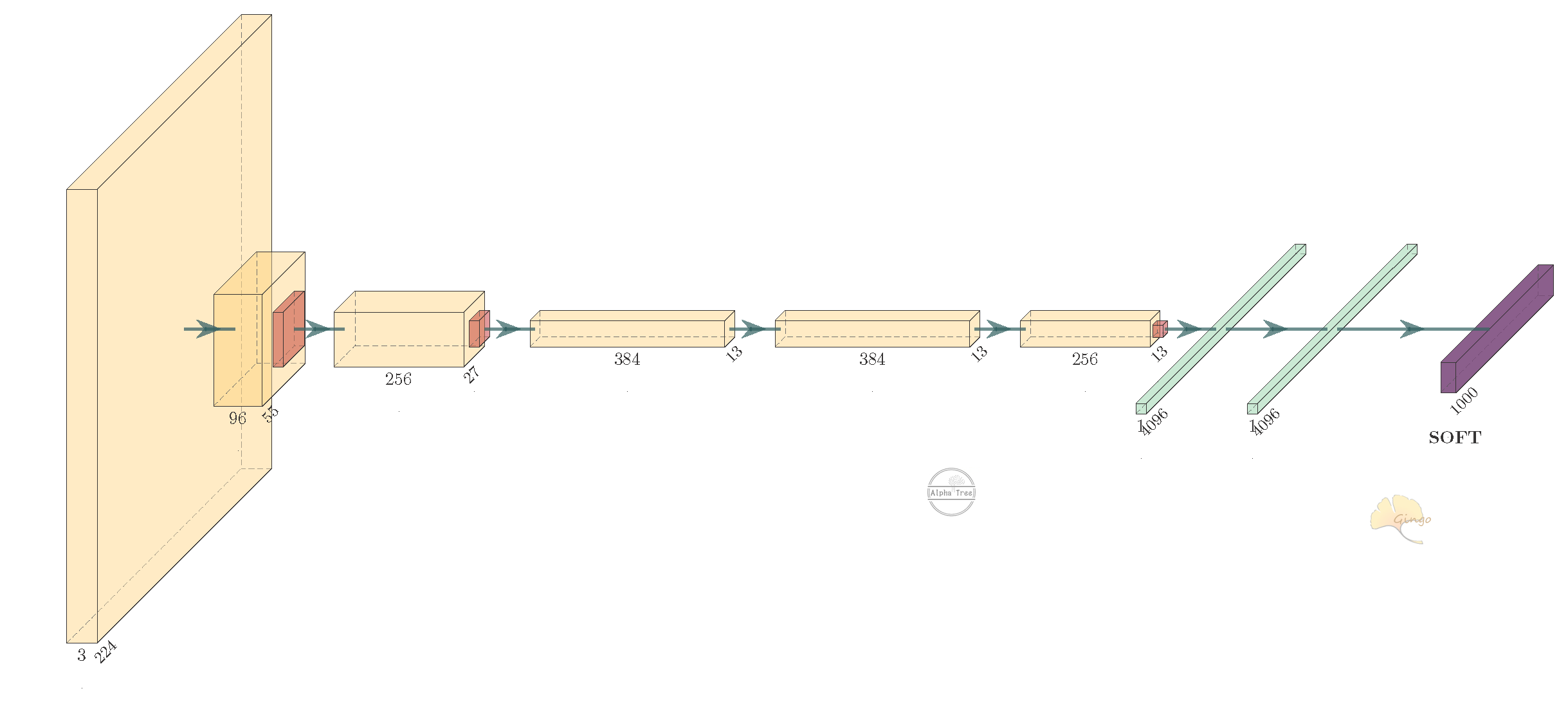
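The data shape changes can also be followed with plain arithmetic. The sketch below assumes the classic LeNet-5 sizes (a 1 x 32 x 32 input, two 5 x 5 convolutions with 6 and 16 output channels, each followed by 2 x 2 pooling); these numbers are not given in the text above, and `LENET_LAYERS` and `trace_shapes` are hypothetical names.

```python
def conv_out(size: int, kernel: int, stride: int = 1, padding: int = 0) -> int:
    """Output length of a convolution or pooling window along one axis."""
    return (size + 2 * padding - kernel) // stride + 1


# Assumed LeNet-style layers: (name, out_channels or None for pooling, kernel, stride).
LENET_LAYERS = [
    ("conv1", 6, 5, 1),
    ("pool1", None, 2, 2),
    ("conv2", 16, 5, 1),
    ("pool2", None, 2, 2),
]


def trace_shapes(channels, size, layers):
    """Return (name, channels, height, width) after every layer."""
    shapes = [("input", channels, size, size)]
    for name, out_channels, kernel, stride in layers:
        size = conv_out(size, kernel, stride)
        if out_channels is not None:  # pooling keeps the channel count
            channels = out_channels
        shapes.append((name, channels, size, size))
    return shapes


if __name__ == "__main__":
    for name, c, h, w in trace_shapes(1, 32, LENET_LAYERS):
        print(f"{name:>6}: {c} x {h} x {w}")
```

Under these assumptions the last feature map is 16 x 5 x 5, so the first fully connected layer would see 400 inputs.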

1.1.2 AlexNet

Paper: "ImageNet Classification with Deep Convolutional Neural Networks"

- Model structure (LRN layers removed, ReLU activation)

- Data shape changes

see more for details.

Reference code:

    import torch.nn as nn


    class AlexNet(nn.Module):
        """AlexNet without LRN layers, using ReLU activations."""

        def __init__(self, num_classes=1000):
            super(AlexNet, self).__init__()
            # Feature extractor: five convolutions and three max-pooling layers.
            self.features = nn.Sequential(
                nn.Conv2d(3, 64, kernel_size=11, stride=4, padding=2),
                nn.ReLU(inplace=True),
                nn.MaxPool2d(kernel_size=3, stride=2),
                nn.Conv2d(64, 192, kernel_size=5, padding=2),
                nn.ReLU(inplace=True),
                nn.MaxPool2d(kernel_size=3, stride=2),
                nn.Conv2d(192, 384, kernel_size=3, padding=1),
                nn.ReLU(inplace=True),
                nn.Conv2d(384, 256, kernel_size=3, padding=1),
                nn.ReLU(inplace=True),
                nn.Conv2d(256, 256, kernel_size=3, padding=1),
                nn.ReLU(inplace=True),
                nn.MaxPool2d(kernel_size=3, stride=2),
            )
            # Fix the spatial size at 6x6 so the classifier input is always 256 * 6 * 6.
            self.avgpool = nn.AdaptiveAvgPool2d((6, 6))
            # Classifier: two dropout-regularized hidden layers and the output layer.
            self.classifier = nn.Sequential(
                nn.Dropout(),
                nn.Linear(256 * 6 * 6, 4096),
                nn.ReLU(inplace=True),
                nn.Dropout(),
                nn.Linear(4096, 4096),
                nn.ReLU(inplace=True),
                nn.Linear(4096, num_classes),
            )

        def forward(self, x):
            x = self.features(x)
            x = self.avgpool(x)
            # Flatten (N, 256, 6, 6) to (N, 9216) for the linear layers.
            x = x.view(x.size(0), 256 * 6 * 6)
            x = self.classifier(x)
            return x

Note:

In this code the first convolution has 64 output channels rather than the 96 used in the original paper, and it applies zero-padding of 2.

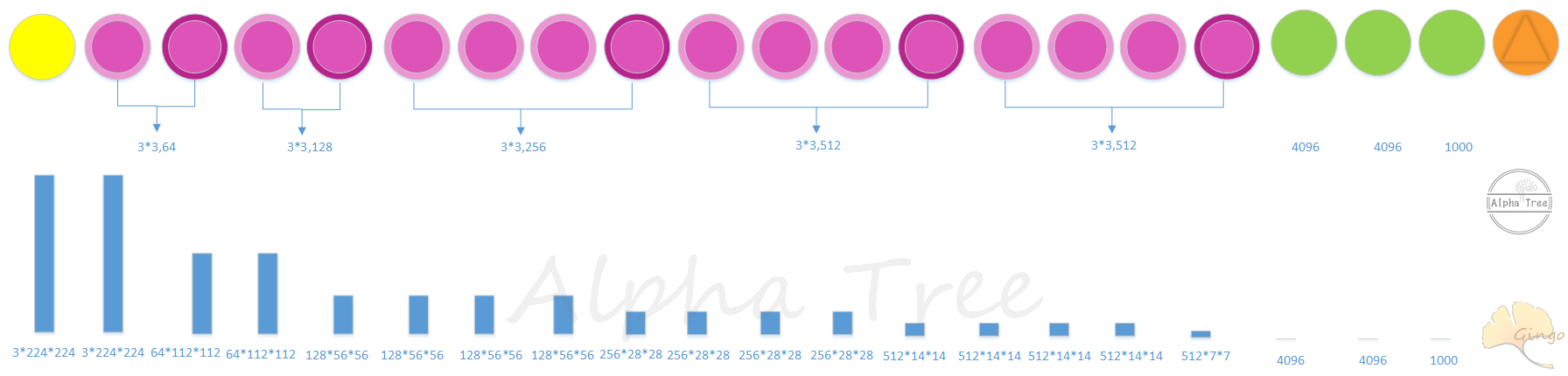
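The `256 * 6 * 6` in the classifier and the padding mentioned in the note can be checked by hand. The following is a rough sketch assuming a 224 x 224 input, which the text does not state; `ALEXNET_FEATURES` and `feature_map_size` are illustrative names that simply mirror the kernel, stride and padding values of the `features` block in the reference code.

```python
def out_size(size: int, kernel: int, stride: int = 1, padding: int = 0) -> int:
    """Output length of a convolution or pooling window along one axis."""
    return (size + 2 * padding - kernel) // stride + 1


# (kernel, stride, padding) of every conv / max-pool layer in `features`, in order.
ALEXNET_FEATURES = [
    (11, 4, 2),  # conv1
    (3, 2, 0),   # max pool
    (5, 1, 2),   # conv2
    (3, 2, 0),   # max pool
    (3, 1, 1),   # conv3
    (3, 1, 1),   # conv4
    (3, 1, 1),   # conv5
    (3, 2, 0),   # max pool
]


def feature_map_size(input_size: int, layers=ALEXNET_FEATURES) -> int:
    """Side length of the feature map after all layers in `layers`."""
    size = input_size
    for kernel, stride, padding in layers:
        size = out_size(size, kernel, stride, padding)
    return size


if __name__ == "__main__":
    side = feature_map_size(224)          # assumed 224 x 224 input
    print(side, 256 * side * side)        # spatial side and flattened length
    # First layer: padding 2 on 224 gives the same width as no padding on 227.
    print(out_size(224, 11, 4, 2), out_size(227, 11, 4, 0))
```

With a 224 x 224 input the feature map is already 6 x 6 before `nn.AdaptiveAvgPool2d((6, 6))`, so the adaptive pooling only matters for other input sizes.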

1.1.3 VGG

- VGG19 model structure

see more for details.
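The "19" in VGG19 counts the layers that carry weights. As a small sketch, the layer list of configuration "E" from this section's reference code can be walked to count them, along with the final feature-map shape and the parameter total. The 224 x 224 input and the 1000-class output are assumptions, and `summarize` is a hypothetical helper.

```python
# Layer list of configuration "E": numbers are conv output channels, "M" is max pooling.
VGG19_CFG = [64, 64, "M", 128, 128, "M", 256, 256, 256, 256, "M",
             512, 512, 512, 512, "M", 512, 512, 512, 512, "M"]


def summarize(cfg, input_size=224, in_channels=3, fc_sizes=(4096, 4096, 1000)):
    """Count weight layers and parameters for a VGG-style configuration."""
    conv_layers = 0
    params = 0
    size = input_size
    channels = in_channels
    for v in cfg:
        if v == "M":
            size //= 2  # 2x2 max pooling with stride 2 halves each side
        else:
            conv_layers += 1
            params += channels * v * 3 * 3 + v  # 3x3 kernel weights plus biases
            channels = v
    in_features = channels * size * size
    for out_features in fc_sizes:
        params += in_features * out_features + out_features
        in_features = out_features
    return {
        "conv_layers": conv_layers,
        "weight_layers": conv_layers + len(fc_sizes),
        "final_map": (channels, size, size),
        "params": params,
    }


if __name__ == "__main__":
    print(summarize(VGG19_CFG))
```

Under these assumptions there are 16 convolutional layers plus 3 fully connected layers, and the last feature map is 512 x 7 x 7, which matches the `512 * 7 * 7` input of the first linear layer.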

Reference code:

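    import torch.nn as nn

    # Configuration "E" (VGG19): numbers are conv output channels, "M" is max pooling.
    cfg = {
        "E": [
            64, 64, "M",
            128, 128, "M",
            256, 256, 256, 256, "M",
            512, 512, 512, 512, "M",
            512, 512, 512, 512, "M",
        ],
    }


    class VGG(nn.Module):
        def __init__(self, features, num_classes=1000, init_weights=True):
            super(VGG, self).__init__()
            self.features = features
            # Fix the spatial size at 7x7 so the classifier input is 512 * 7 * 7.
            self.avgpool = nn.AdaptiveAvgPool2d((7, 7))
            self.classifier = nn.Sequential(
                nn.Linear(512 * 7 * 7, 4096),
                nn.ReLU(True),
                nn.Dropout(),
                nn.Linear(4096, 4096),
                nn.ReLU(True),
                nn.Dropout(),
                nn.Linear(4096, num_classes),
            )
            if init_weights:
                self._initialize_weights()

        def forward(self, x):
            x = self.features(x)
            x = self.avgpool(x)
            x = x.view(x.size(0), -1)  # flatten to (N, 512 * 7 * 7)
            x = self.classifier(x)
            return x

        def _initialize_weights(self):
            # Missing from the original listing; added so init_weights=True works.
            # The scheme (Kaiming for conv, small Gaussian for linear) is an assumption.
            for m in self.modules():
                if isinstance(m, nn.Conv2d):
                    nn.init.kaiming_normal_(m.weight, mode="fan_out", nonlinearity="relu")
                    if m.bias is not None:
                        nn.init.constant_(m.bias, 0)
                elif isinstance(m, nn.Linear):
                    nn.init.normal_(m.weight, 0, 0.01)
                    nn.init.constant_(m.bias, 0)


    def make_layers(cfg):
        """Build the convolutional part from a list of channel counts and "M" markers."""
        layers = []
        in_channels = 3
        for v in cfg:
            if v == "M":
                layers += [nn.MaxPool2d(kernel_size=2, stride=2)]
            else:
                conv2d = nn.Conv2d(in_channels, v, kernel_size=3, padding=1)
                layers += [conv2d, nn.ReLU(inplace=True)]
                in_channels = v
        return nn.Sequential(*layers)


    def vgg19(**kwargs):
        return VGG(make_layers(cfg["E"]), **kwargs)

1.2 Object Detection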

1.3 Object Segmentation